Provider

provider "aws" {
  alias  = "tokyo"
  region = "ap-northeast-1"
}
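An aliased provider is only used by resources that ask for it. The sketch below shows this, assuming a separate default (unaliased) `aws` provider; its `us-east-1` region, the `tokyo_example` resource name, and the bucket name are all hypothetical. The `aws_s3_bucket` resource opts into the Tokyo provider through the `provider = aws.tokyo` meta-argument. Anything that omits that argument falls back to the default provider.

```hcl
# Default provider with no alias (the region is a hypothetical example).
# Resources that do not set `provider` use this one.
provider "aws" {
  region = "us-east-1"
}

# Aliased provider from the snippet above, pinned to the Tokyo region.
provider "aws" {
  alias  = "tokyo"
  region = "ap-northeast-1"
}

# This resource explicitly selects the aliased provider,
# so the bucket is created in ap-northeast-1.
resource "aws_s3_bucket" "tokyo_example" {
  provider = aws.tokyo
  bucket   = "example-tokyo-bucket" # hypothetical; S3 bucket names must be globally unique
}
```

When calling a module, pass the aliased provider with `providers = { aws = aws.tokyo }` on the module block instead.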

AWS

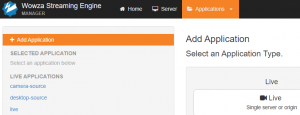

Uploading Wowza-recorded media to AWS S3

Using SaltStack to deploy auto-scaling EC2 instances

Configuration...

AWS VPC point-to-point connection with a GRE tunnel

Configuration...

AWS CloudWatch query script for Zabbix

Script...

Connecting AWS VPCs in different Regions over IPsec

Configuration...

Dynamic DynamoDB

Link...

How to use AWS ElastiCache on Azure

Configuration...

A lookup table comparing Azure and AWS

Comparison table...

Repost: WordPress in the Cloud (complete)

Video...