Installation process …

Linux

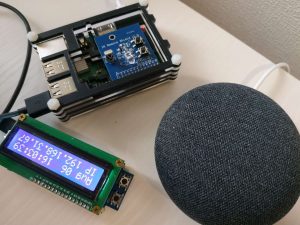

Bought an IR module and a small LCD on Taobao

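I bought an IR module and a small LCD on Taobao.

Combined with the one I bought earlier, the Google…

When I have time, I plan to get at least the lights and the TV at home under voice control.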

Building a print server on a Raspberry Pi 3B+

First, install CUPS:

sudo apt install cups

HAProxy 1.6 configuration file

    global
        log /dev/log local0
        log /dev/log local1 notice
        chroot /var/lib/haproxy
        user haproxy
        group haproxy
        maxconn 6000
        daemon
        tune.ssl.default-dh-param 2048

    defaults
        log global
        mode http
        option httplog
        option dontlognull
        timeout connect 5000
        timeout client 50000
        timeout server 50000

    listen stats
        bind 0.0.0.0:8080
        mode http
        stats enable
        stats hide-version
        stats realm Haproxy\ Statistics
        stats uri /stats
        stats auth username:password

    frontend http_yemaosheng
        bind *:80
        mode http
        default_backend web-nodes

    frontend https_yemaosheng
        #cat yemaosheng.crt yemaosheng.key | tee yemaosheng.pem
        bind *:443 ssl crt /root/yemaosheng.pem
        mode http
        option httpclose
        option forwardfor
        reqadd X-Forwarded-Proto:\ https
        default_backend web-nodes

    backend web-nodes
        mode http
        balance roundrobin
        option forwardfor
        server web-1 10.0.1.2:80 check
        server web-2 10.0.1.3:80 check
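The commented-out `cat ... | tee` line in the `https_yemaosheng` frontend hints at how the combined PEM file is produced: the certificate and private key are concatenated into one file. A minimal sketch of that step follows; the `build_pem` helper name and the example paths in the comments are assumptions for illustration, not part of the original post.

```shell
#!/bin/sh
# Hypothetical helper: join a certificate and its private key into the single
# PEM file that a `bind ... ssl crt <file>` line points at.
build_pem() {
    crt="$1"; key="$2"; out="$3"
    # Stop early if either input file is missing.
    [ -f "$crt" ] && [ -f "$key" ] || { echo "missing input file" >&2; return 1; }
    # Write certificate first, then key; the subshell keeps the umask change local.
    ( umask 077 && cat "$crt" "$key" > "$out" ) || return 1
    # Make sure an already existing output file also ends up owner-only.
    chmod 600 "$out"
}

# Example usage (paths are assumptions):
#   build_pem yemaosheng.crt yemaosheng.key /root/yemaosheng.pem
#   haproxy -c -f /etc/haproxy/haproxy.cfg   # check the config before reloading
```

Running `haproxy -c -f` against the configuration file before a reload is a cheap way to catch a bad certificate path or a typo.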

Using free SSL

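The company acquired a large batch of forums that all need SSL, and buying a certificate for each one would be a considerable expense.

So the plan is to use free SSL certificates from Let's Encrypt for all of them.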
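The excerpt does not show how the certificates are requested. One possible approach, assuming the `certbot` client with webroot validation and one document root per forum under a common directory, is sketched below. The `issue_certs` function, the `DRY_RUN` switch, and the example domains are hypothetical.

```shell
#!/bin/sh
# Hypothetical wrapper: request one Let's Encrypt certificate per forum domain.
# Usage: issue_certs <webroot-base> <domain>...
# With DRY_RUN=1 the certbot commands are printed instead of executed.
issue_certs() {
    webroot="$1"; shift
    for domain in "$@"; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "certbot certonly --webroot -w $webroot/$domain -d $domain"
        else
            # Assumes each forum is served from <webroot-base>/<domain>.
            certbot certonly --webroot -w "$webroot/$domain" -d "$domain"
        fi
    done
}

# Example usage (domains are placeholders):
#   DRY_RUN=1; issue_certs /var/www forum1.example.com forum2.example.com
```

Printing the commands first makes it easy to review a long domain list before any request is sent to Let's Encrypt.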

nginx configuration for pointing a site at different paths

Configuration...

Add basic HTTP access auth via HAProxy

Configuration...

HAProxy cfg for Redis Sentinel

Configuration...

Hive installation and configuration

Configuration...

Hadoop cluster installation and configuration

Configuration...