See clearly how Python code executes

https://pythontutor.com/

Excel: getting the day of the week with the WEEKDAY function
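
Excel's `WEEKDAY(serial_number, [return_type])` returns the day of the week as a number. With the default return type 1, Sunday is 1 and Saturday is 7. Type 2 counts Monday=1 to Sunday=7, and type 3 counts Monday=0 to Sunday=6.

The Python sketch below only illustrates that numbering and is not the post's own method. The names `excel_weekday`, `day_name` and `DAY_NAMES` are hypothetical, and only return types 1 to 3 are covered.

```python
from datetime import date

def excel_weekday(d: date, return_type: int = 1) -> int:
    """Emulate Excel's WEEKDAY numbering for return types 1, 2 and 3."""
    if return_type == 1:   # Excel default: Sunday=1 ... Saturday=7
        return d.isoweekday() % 7 + 1
    if return_type == 2:   # Monday=1 ... Sunday=7 (same as ISO numbering)
        return d.isoweekday()
    if return_type == 3:   # Monday=0 ... Sunday=6 (same as date.weekday())
        return d.weekday()
    raise ValueError(f"unsupported return_type: {return_type}")

# Hypothetical lookup table ordered to match return_type 1 (Sunday first).
DAY_NAMES = ["Sun", "Mon", "Tue", "Wed", "Thu", "Fri", "Sat"]

def day_name(d: date) -> str:
    """Turn the WEEKDAY number into a day name by indexing the table."""
    return DAY_NAMES[excel_weekday(d) - 1]

print(excel_weekday(date(2022, 2, 1)), day_name(date(2022, 2, 1)))  # prints: 3 Tue
```

In a worksheet, the equivalent step is picking a name from a list using the WEEKDAY result as the index.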

Checking file existence and contents with GitHub Actions

.github/workflows/filecheck.yml
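
The workflow file itself is not reproduced here, so this is only a hedged sketch of the kind of check such a workflow might run. It assumes a hypothetical script, `check_files.py`, that a `run:` step could invoke as `python3 check_files.py README.md "LICENSE=MIT License"`.

Each argument is either `PATH` (the file must exist) or `PATH=TEXT` (the file must exist and contain `TEXT`). The argument syntax and function names are assumptions. The script prints GitHub Actions `::error::` annotations and exits with status 1 when any check fails, which marks the step as failed.

```python
import sys
from pathlib import Path

def check(specs, root="."):
    """Return error messages; each spec is 'PATH' or 'PATH=TEXT' (hypothetical syntax)."""
    errors = []
    for spec in specs:
        rel, sep, needle = spec.partition("=")
        path = Path(root) / rel
        if not path.is_file():
            errors.append(f"missing file: {rel}")
        elif sep and needle not in path.read_text(encoding="utf-8"):
            errors.append(f"{rel} does not contain {needle!r}")
    return errors

def main(argv):
    errors = check(argv)
    for message in errors:
        # '::error::' is a workflow command; Actions shows the text as an error annotation.
        print(f"::error::{message}")
    return 1 if errors else 0

if __name__ == "__main__":
    # A non-zero exit status makes the workflow step fail.
    if main(sys.argv[1:]) != 0:
        sys.exit(1)
```

Passing the rules as arguments keeps the checks visible in the workflow YAML rather than hidden in the script.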

Happy Chinese New Year 2022

A namespace is stuck in the Terminating state

Reference:

https://www.ibm.com/docs/en/cloud-private/3.2.0?topic=console-namespace-is-stuck-in-terminating-state

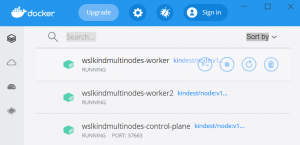

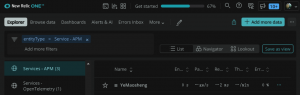
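
A commonly used workaround for a namespace that hangs in `Terminating` is to clear its `spec.finalizers` list and submit the result to the namespace's `finalize` subresource. This sketch is not the procedure from the referenced page. It uses a hypothetical helper, `strip_finalizers.py`, that covers only the JSON-editing step. The helper reads the output of `kubectl get namespace NAME -o json` from a file and prints the manifest with `spec.finalizers` emptied.

```python
import json
import sys

def strip_finalizers(namespace_json: str) -> str:
    """Return the namespace manifest with spec.finalizers set to an empty list."""
    manifest = json.loads(namespace_json)
    # A non-empty spec.finalizers list (usually ["kubernetes"]) keeps the
    # namespace from being removed; emptying it lets deletion complete.
    manifest.setdefault("spec", {})["finalizers"] = []
    return json.dumps(manifest, indent=2)

if __name__ == "__main__" and len(sys.argv) == 2:
    # Hypothetical usage: python3 strip_finalizers.py ns.json > tmp.json
    with open(sys.argv[1], encoding="utf-8") as f:
        print(strip_finalizers(f.read()))
```

A typical sequence is as follows:

1. Run `kubectl get namespace NAME -o json > ns.json`.
2. Run `python3 strip_finalizers.py ns.json > tmp.json`.
3. Run `kubectl replace --raw "/api/v1/namespaces/NAME/finalize" -f ./tmp.json`.

Clearing finalizers skips the cleanup they were guarding. It is worth first checking which resources in the namespace are still pending deletion.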

WSL2+Docker+Kind

Going to the office


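Because of the state of emergency, everything from my online interview onward was done fully remotely, and I had never been to the company in person.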

Today I went to the company office for the first time since onboarding.

It had been a long time since I had gotten up this early and taken the train.

Going forward, I would still rather keep working remotely.