Configuration

Jenkins installation and configuration

Steps...

Hive installation and configuration

Configuration...

Alpine Linux LNMP installation and configuration

Installation and configuration...

Configuring a load balancer with the Azure command line

Configuration...

Sublime essentials

Commonly used plugins:

BracketHighlighter

CodeIntel

ConvertToUTF8

DocBlockr

Emmet

SideBarEnhancements

Preferences.sublime-settings:

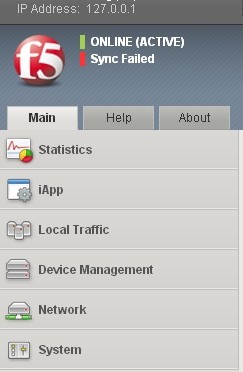

Currently looking at F5 configuration

Puppet installation, configuration, and usage

Installation, configuration, and usage...

Cisco review (Frame Relay)

Some commands and operations…

Cisco review (VoIP)

Some commands and operations…